Reversible GANs for Memory-efficient Image-to-Image Translation

Tycho F.A. van der Ouderaa Daniel E. Worrall

University of Amsterdam

In CVPR 2019


Abstract

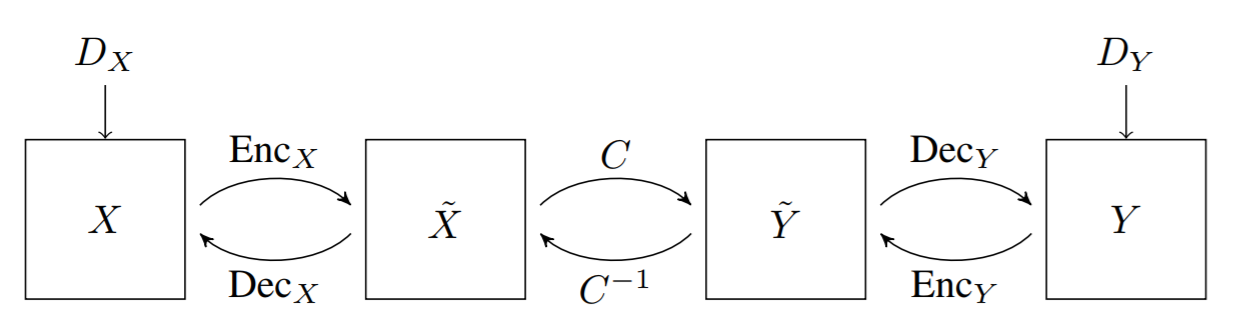

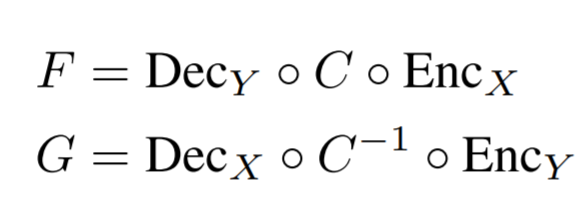

The Pix2pix and CycleGAN losses have vastly improved the qualitative and quantitative visual quality of results in image-to-image translation tasks. We extend this framework by exploring approximately invertible architectures in 2D and 3D which are well suited to these losses. These architectures are approximately invertible by design and thus partially satisfy cycle-consistency before training even begins. Furthermore, since invertible architectures have constant memory complexity in depth, these models can be built arbitrarily deep. We demonstrate superior quantitative output on the Cityscapes and Maps datasets at a near-constant memory budget.
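The invertibility and memory claims above rest on reversible building blocks. The sketch below is a minimal, hypothetical illustration using NumPy rather than the authors' PyTorch code. It assumes RevNet/NICE-style additive coupling, in which the channels are split into halves and each half is updated using an arbitrary function of the other half. Such a block can be inverted exactly without inverting its sub-networks. Running the stacked blocks forwards maps domain A to domain B, and running their inverses backwards maps B to A with the same weights. The names `AdditiveCoupling`, `ReversibleCore`, `F`, and `G` are illustrative only, and the tiny tanh-linear maps stand in for convolutional sub-networks.

```python
import numpy as np


class AdditiveCoupling:
    """Hypothetical additive coupling block (RevNet/NICE style).

    Forward:  y1 = x1 + F(x2);  y2 = x2 + G(y1)
    Inverse:  x2 = y2 - G(y1);  x1 = y1 - F(x2)
    F and G themselves never need to be inverted.
    """

    def __init__(self, channels, rng):
        half = channels // 2
        # Stand-ins for conv sub-networks: small tanh-activated linear maps.
        self.wf = rng.standard_normal((half, half)) * 0.5
        self.wg = rng.standard_normal((half, half)) * 0.5

    def F(self, h):
        return np.tanh(h @ self.wf)

    def G(self, h):
        return np.tanh(h @ self.wg)

    def forward(self, x):
        x1, x2 = np.split(x, 2, axis=-1)  # split the channel axis in half
        y1 = x1 + self.F(x2)
        y2 = x2 + self.G(y1)
        return np.concatenate([y1, y2], axis=-1)

    def inverse(self, y):
        y1, y2 = np.split(y, 2, axis=-1)
        x2 = y2 - self.G(y1)  # undo the second update first
        x1 = y1 - self.F(x2)
        return np.concatenate([x1, x2], axis=-1)


class ReversibleCore:
    """Hypothetical stack of coupling blocks; inverse runs them in reverse."""

    def __init__(self, channels, depth, seed=0):
        rng = np.random.default_rng(seed)
        self.blocks = [AdditiveCoupling(channels, rng) for _ in range(depth)]

    def forward(self, x):
        for block in self.blocks:
            x = block.forward(x)
        return x

    def inverse(self, y):
        for block in reversed(self.blocks):
            y = block.inverse(y)
        return y


# Features laid out as (num_pixels, channels); channels must be even.
x = np.random.default_rng(1).standard_normal((16, 8))
core = ReversibleCore(channels=8, depth=10)
y = core.forward(x)       # direction A -> B
x_rec = core.inverse(y)   # direction B -> A, sharing the same weights
print(np.allclose(x, x_rec))  # True: the core is cycle-consistent by construction
```

Because each block's input can be recomputed from its output, a training framework can discard intermediate activations in the forward pass and reconstruct them during backpropagation. That is what keeps memory roughly constant as depth grows. This sketch shows only the exact inversion, not that memory-saving backward pass. Under our assumption, an exactly invertible core like this would become only *approximately* invertible once wrapped between non-invertible input and output layers. That would match the abstract's description of a model that partially satisfies cycle-consistency before training.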

Paper

arXiv:1902.02729, 2019

Citation

Tycho F.A. van der Ouderaa, Daniel E. Worrall. "Reversible GANs for Memory-efficient Image-to-Image Translation", in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

@inproceedings{vanderouderaa2019reversible,
  title={Reversible GANs for Memory-efficient Image-to-Image Translation},
  author={van der Ouderaa, Tycho F.A. and Worrall, Daniel E.},
  booktitle={CVPR 2019},
  year={2019}
}

Code

A PyTorch implementation is available in this GitHub repository. For questions about the code, please open an issue on the GitHub project or contact Tycho van der Ouderaa.


Acknowledgements

We are grateful to the Diagnostic Image Analysis Group (DIAG) of the Radboud University Medical Center, and in particular to Prof. Dr. Bram van Ginneken, for his collaboration on this project. We also thank the Netherlands Organisation for Scientific Research (NWO) for supporting this research and providing computational resources.